TL;DR

"Artificial Intelligence" is here (sort of), so what is it good for? Turns out, it's good for book cover design, editorial assistance, and quirky Christmas gifts. It's hopefully not the harbinger of the creative and environmental apocalypse some fear, but a potentially useful tool in the writer's utility belt, one that requires a thoughtful, conscientious approach. Also, something about washing machine part badger art?

There has been, as I'm sure you're aware, a tremendous explosion in recent years in the availability and capability of new, so-called "artificial intelligence" tools. The rapid expansion of this newly viable technology has brought a tsunami of both passionate support and passionate opposition.

Since Twiki and Dr. Theopolis navigated the post-apocalyptic 25th century with Buck and Wilma, I've been interested in artificial intelligence, and have followed real-world research and explorations from the simplest AI precursors like Eliza, to genetic algorithms and neural networks, to more advanced implementations like Deep Blue and Watson. Though I never had the resources, mentors, or programming prowess to experiment with it myself, I maintained a passive interest.

Really, none of what we're seeing today is actually new; it's just that computational capacity has finally caught up to what we've been trying to achieve for most of my lifetime.

AIrt and AImagination

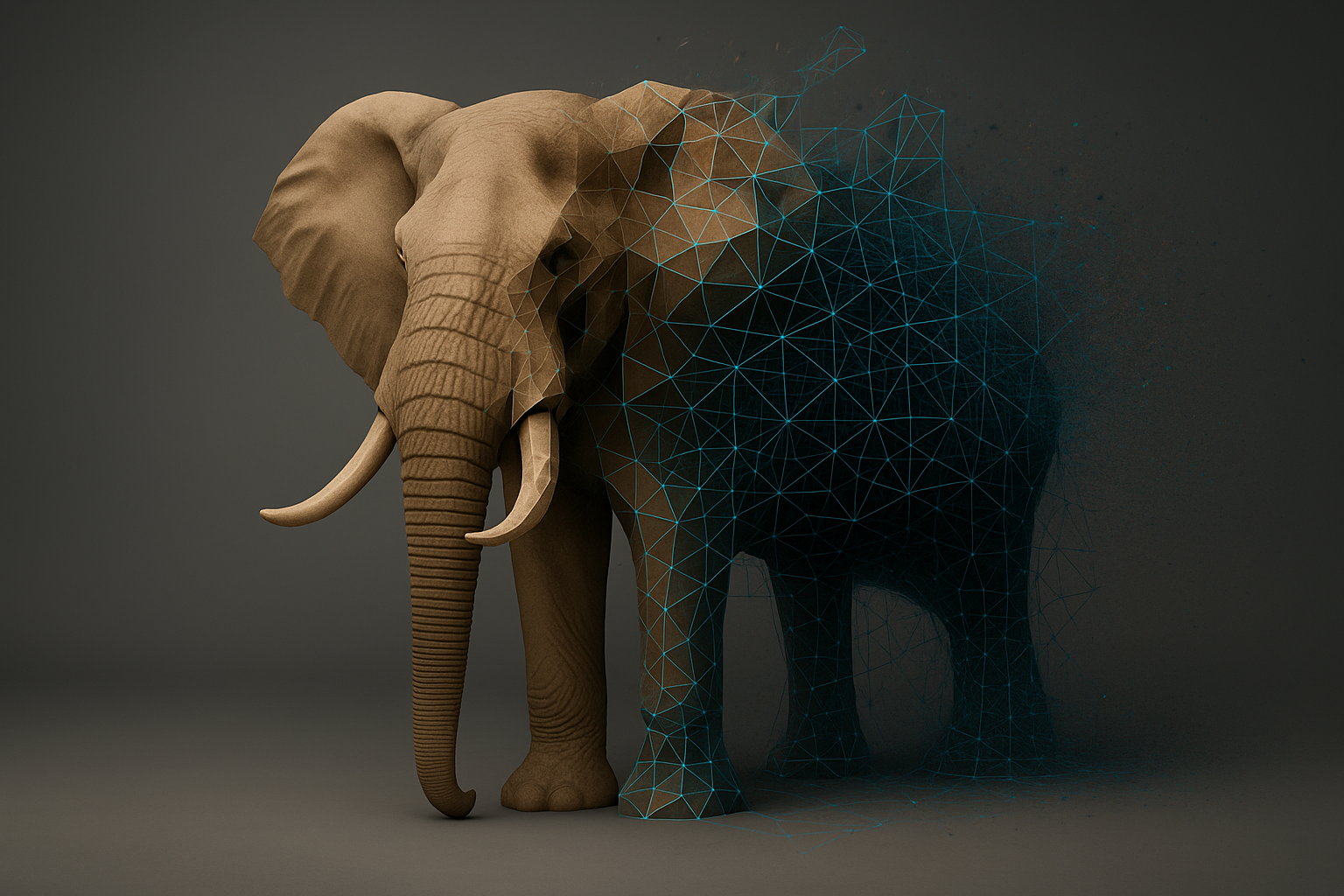

Interestingly, modern image generators arrived before the seemingly simpler language models, making quite a splash with the psychedelic imagery they initially produced. When introduced, ChatGPT was little more than a quickly dismissed novelty, but Dall-E (released in 2021, a little before the release of Our Once Warm Earth) was more interesting.

When prepping that book's cover, I played with Dall-E and other diffusion models, but couldn't get them to produce anything usable. Had they been more capable, Our Once Warm Earth might have been among the first books released with AI-generated cover art.

I've since tried newer models on smaller projects, often with interesting results but varying success. So, when it came time to design the cover for Inheritance of Dust—knowing I can't afford to hire a designer—I wanted to put the current crop of image models through their paces.

None of them, it turns out, could yet produce a usable full cover image that met my aesthetic standards. I did, however, coerce a series of more focused image elements out of them, which I then combined in Photoshop for the final design.

Perhaps you noticed the mention on Inheritance of Dust's copyright page that the cover image was derived from AI-generated source material. This type of "AI usage notice" is a practice many authors are adopting in the interests of ethical transparency, and one I support. Some publishing and distribution services now require authors to disclose the role, if any, that AI has played in the creation of their works.

Words, Words...

As an author and a developer, I solve creative problems with language "all day, er' day." And solving complex language problems is basically the definition of a Large Language Model.

I've been dabbling with text-based generative models for almost two years now. Like the original ChatGPT, they started out mostly as toys. One of my first AI-assisted projects was a fictional, "private investigator"-style dossier on the family to accompany a Christmas gift of tickets to "Clue the Musical." The stories were weird, funny, and a big hit. What would have taken me days to write, the model had written "well enough" in under 20 minutes.

Since then I've used chat-style models for personal research (such as feeding them my entire corpus of old blog posts and having them analyze it and answer questions about me), as a brainstorming partner, as a critical first reader, and, most recently, as a personal (and literally virtual) author's assistant.

I fed the entirety of Our Once Warm Earth and Inheritance of Dust into Google's NotebookLM and used it extensively as an editorial assistant over the last couple of months. Where my editorial notes were nebulous or wide-ranging, it helped identify specific areas where I could apply them. It found in minutes what would have required hours of re-reading myself, allowing me to complete my edits months earlier than I otherwise might have.

Now, LLMs are integrated into nearly every tool I use every day, from grammar checkers to code editors. They're in my phone, on my TV, and in my smart home (another sci-fi fantasy realized). They have transformed the work that I do, and, at least as an experienced developer (20+ years!), I find them fantastically useful.

But, but...

All of this progress hasn't come without resistance, and understandably so, especially as big tech implementations grow ever hungrier for power. Making all those electrons jump through hoops requires energy and generates heat, and the one thing we need less of right now is heat, am I right? (For future historians' context, we are currently in the middle of one of the hottest summers in my memory.)

Aside from the technological and climatological concerns, there has been a lot of (also very understandable) teeth gnashing and clothes rending over how these big artificial "brains" have been fed. Countless reports have suggested—and direct admissions confirmed—that some of the most popular models have been trained on copyrighted works. This can be demonstrably bad when their output clearly plagiarizes their sources, perhaps doubly so with visual art. Personal artistic style, especially in visual media, is hard fought and hard won, highly subjective, and difficult to reproduce—unless you're an art robot.

On the flip side, all of us are the product of what we've taken in over our lives, and I would challenge any creative person who claimed to have produced their art entirely without external influence. The difference (well, A difference) between us creative meat sacks and the undying art robots is a question of effort and scale. Van Gogh may have created more than 2,000 individual pieces of art in his lifetime (if Wikipedia is to be believed), but one of our modern art robots could do that—DOES do that—in a few weeks, if not days.

Art may be in the eye of the beholder, but I would suggest that the value of art derives from the conditions of its genesis, from how it is mined from the passionate, squishy, self-questioning of lived human experience, and from the myriad, ineffable responses it brings up in those who appreciate it. Art isn't just an image, a paragraph, or a loose collection of used washing machine parts assembled into something resembling a badger. Art is that feeling you get when you witness a beautiful sunrise, or touch your lover's cheek, or squish your toes in the muddy bank of a burbling stream; but, y'know, from something hung on the wall in front of you, or held in your hands, or staring at you suspiciously from a corner with its chromed knob eyes.

Art is how a perfectly sensible blog post goes completely off the rails and turns into a rant about washing machine badger art.

Loop closed.

Reality sets in...

SO, how am I using AI? Some of it I've covered, but what it boils down to is this: AI is a tool that, like any tool, can be abused or used for evil in the wrong hands.

For me it's a hammer that breaks through the crust of the blank page, a lens that helps me focus when I know I want to do something, but I'm paralyzed by all the somethings I could possibly do. It is a database of me and my writing (this really is THE killer feature, for me)—infinitely searchable using complex, inscrutable criteria. It's an amusing toy that writes funny announcements on demand to play over the "smart" speakers in my house. It is, quite often, the thing that shows me what I don't want, so that I can think more clearly about what I do want.

It will help the inept visual artist in me create images for small projects that won't pay for themselves, while I continue to support real artists and designers when I can afford to.

I don't and won't use it to write for me, or to copy someone else's written or visual style, but I will use it as a critique partner.

When possible, I'll use it locally, on my own hardware, so I'm not contributing to the ecological decline of northern Arkansas, or wherever Bezmusuckertron builds their next AI mega nexus.

"AI" is a tool that I intend to use in full awareness of its flaws and implications, as conscientiously and as wisely as I can.

Wrap up...

I'm realizing, about 800 words beyond my initial target for this post, that I only barely touched on the whole copyright/open-source angle I had in mind. Maybe another time.

Until then, what's your take on all this AI malarkey? Love it, hate it, believe that it heralds the imminent rise of sentient cyberlords bent on world domination and our eventual total annihilation? Or, perhaps more rationally, that we should ix-nay on the IA-ay and figure out how to address our climate crisis and preserve our dwindling freshwater resources before we turn our planet into Venus 2?

Let me hear it!
